My role: UE5 virtual production technician, VFX Artist, Environmental Lighting Artist, Cinematographer

Abstract

Burrata Virtual Production is a 14-week discovery project aimed at exploring and evaluating various virtual production technologies to establish a practical pipeline for CMU’s Entertainment Technology Center (ETC).

As the first Virtual Production studio at ETC, our objective is to lay the groundwork for future students to create virtual production projects.

While our technology may not match industry standards, we aim to build a pipeline that mirrors industry practices and is adaptable for future advancements. By providing working demo clips and a sample scene, we intend to validate the effectiveness of our pipeline in producing high-quality VP projects.

Traditional Production vs. Realtime Virtual Production

Traditional film production is a linear process that moves from pre-production (concepting and planning), through production (filming) and finally to post-production (editing, color grading, and visual effects).

In contrast, virtual production merges each production phase and the final result, enabling directors, cinematographers, and producers to see a representation of the finished look much earlier on in the production process, and therefore iterate quickly and inexpensively.

In Camera VFX Pipeline Set-up:

We researched the industry pipeline and found a widely used example:

The Switchboard control center launches multiple Unreal instances on different machines, and the version control service keeps them coordinated on the same network.

The Editor instances (editor mode) control the nDisplay instances (play mode) in real time, and the image from each nDisplay instance is rendered on a different display of the LED volume.
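The layout described above can be sketched as a small data model. This is only an illustration of the topology, not Switchboard's or nDisplay's actual configuration format: the `Instance` class, the `display_map` helper, and every host and display name are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Instance:
    """One Unreal instance launched on one machine (all fields hypothetical)."""
    host: str                      # machine name on the shared network
    role: str                      # "editor" or "ndisplay"
    display: Optional[str] = None  # display on the LED volume, render nodes only


def display_map(instances: List[Instance]) -> Dict[str, str]:
    """Return {display: host} after checking the layout described above."""
    if not any(i.role == "editor" for i in instances):
        raise ValueError("need at least one editor instance to drive the cluster")
    mapping: Dict[str, str] = {}
    for inst in instances:
        if inst.role != "ndisplay":
            continue
        if inst.display is None:
            raise ValueError(f"{inst.host}: nDisplay instance has no display")
        if inst.display in mapping:
            raise ValueError(f"{inst.display}: rendered by two instances")
        mapping[inst.display] = inst.host
    return mapping


# Assumed example: one operator machine and two render machines.
cluster = [
    Instance("operator-pc", "editor"),
    Instance("render-01", "ndisplay", "wall-left"),
    Instance("render-02", "ndisplay", "wall-right"),
]
print(display_map(cluster))
```

Checking that every display is driven by exactly one render instance mirrors the one-instance-per-display arrangement of the pipeline, and catches a misassigned machine before anything is launched.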

While setting up this pipeline, we found a compatibility issue between our Vive trackers and the Switchboard control panel.

We therefore looked for an engine version that supports local preview (5.4), which overrides Switchboard control.

Test-Shooting with ETC Cavern (720-degree Projection Screen)

Test-shooting with LED Television Screen

ICVFX Shoot Floor Plan In ETC Cavern (720 Degree Projection Screen)

On-site Virtual Production Filming:

This section documents our on-site filming process. We matched the physical lights to the virtual backdrop and blended them together through real-time color grading inside the Unreal Engine Editor.
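One way to reason about the matching step is as a per-channel gain that maps a neutral patch in the virtual backdrop onto the same patch under the physical lights. The sketch below is an illustration only, not the procedure used on set: it assumes linear RGB values in the 0 to 1 range, and the function names and sample readings are hypothetical.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def match_gains(reference: RGB, measured: RGB) -> RGB:
    """Per-channel multipliers that map `measured` onto `reference`."""
    if any(m <= 0 for m in measured):
        raise ValueError("measured channels must be positive")
    return tuple(r / m for r, m in zip(reference, measured))


def apply_gains(rgb: RGB, gains: RGB) -> RGB:
    """Scale each channel by its gain, clamping to the displayable range."""
    return tuple(min(1.0, c * g) for c, g in zip(rgb, gains))


# Assumed readings of a gray card: physical set vs. virtual backdrop.
physical_gray = (0.50, 0.45, 0.40)  # warmer, lit by the physical lights
virtual_gray = (0.40, 0.45, 0.50)   # cooler, as shown on the backdrop

gains = match_gains(physical_gray, virtual_gray)
print(gains)
print(apply_gains(virtual_gray, gains))
```

Gains above 1 brighten a channel and gains below 1 darken it, so a warm physical set pushes the backdrop's red up and its blue down. In practice the grade is still judged by eye through the camera, since the camera and the wall each add their own response.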

Capturing reflections in camera adds to the believability of the shot, which a green screen cannot achieve.

Environmental Lighting And Rendering Work

To achieve better believability of the virtual background and physical set, I iterated on the environmental lighting and rendering techniques.

Before

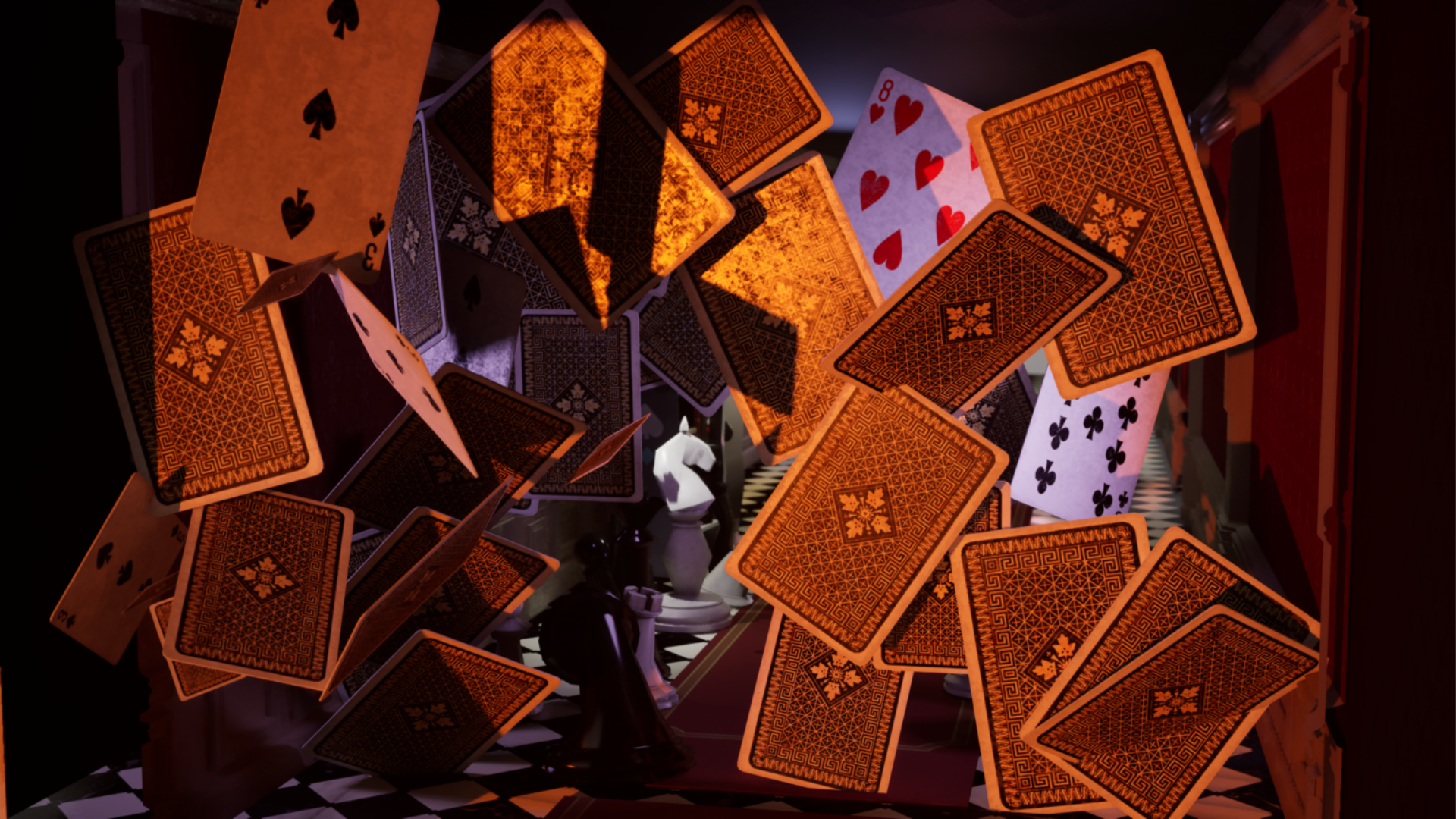

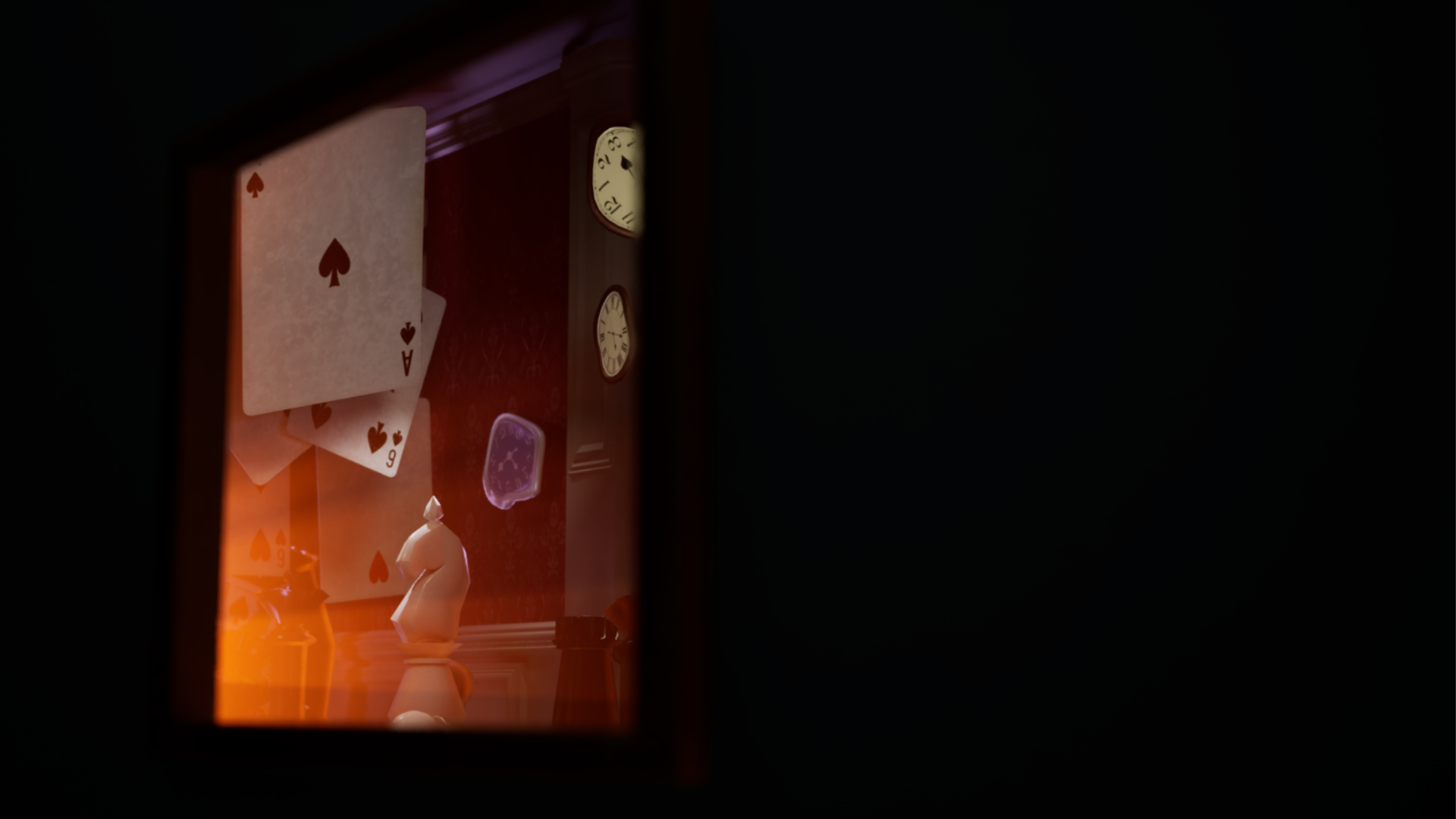

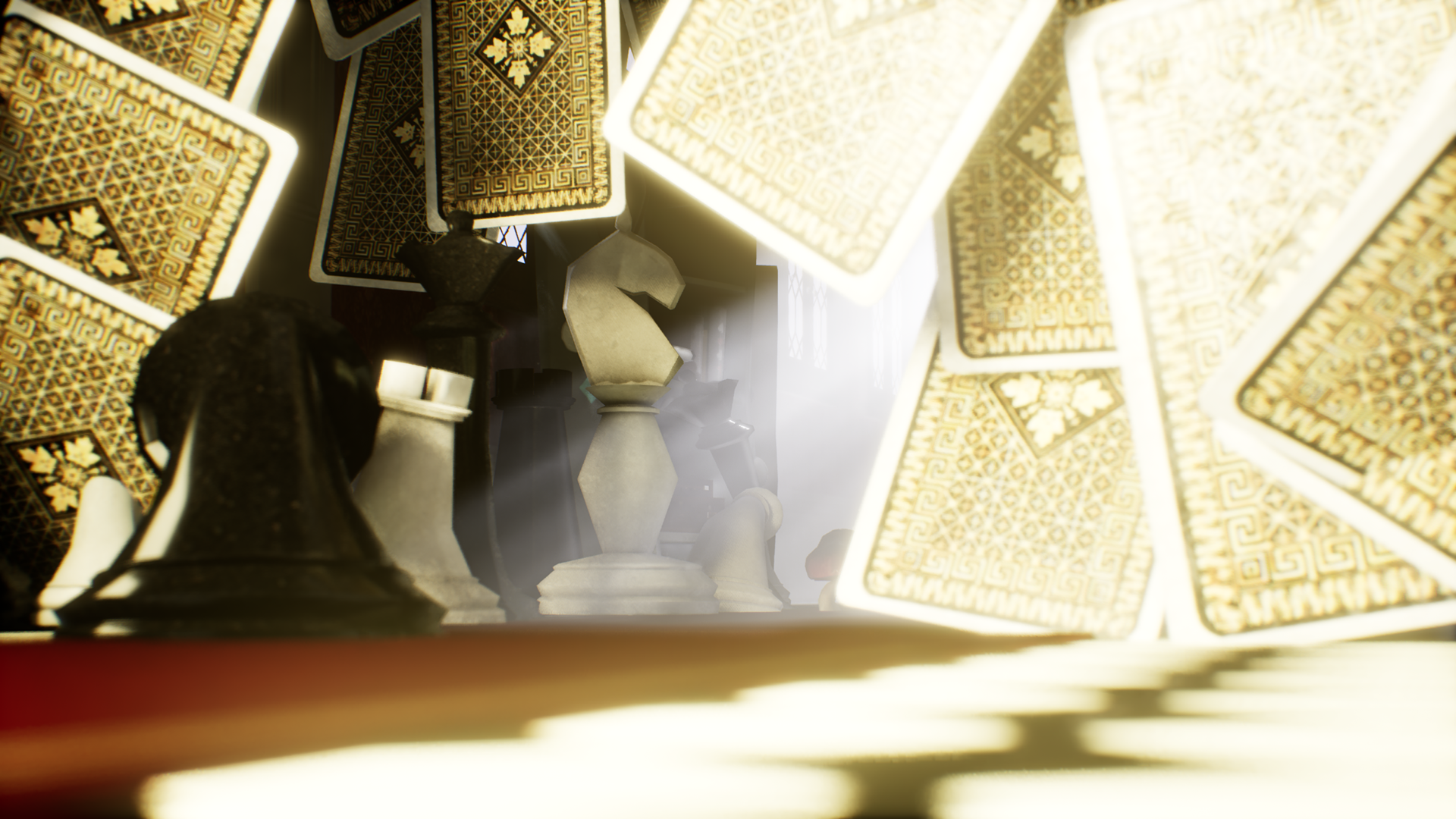

In the first iteration, I aimed for a dream-like, nearly seductive color tone to match the theme of Alice in Wonderland.

The dawn sunlight sets a gloomy environment, where the under-exposed lighting shows off the specular texture of our playing-card assets.

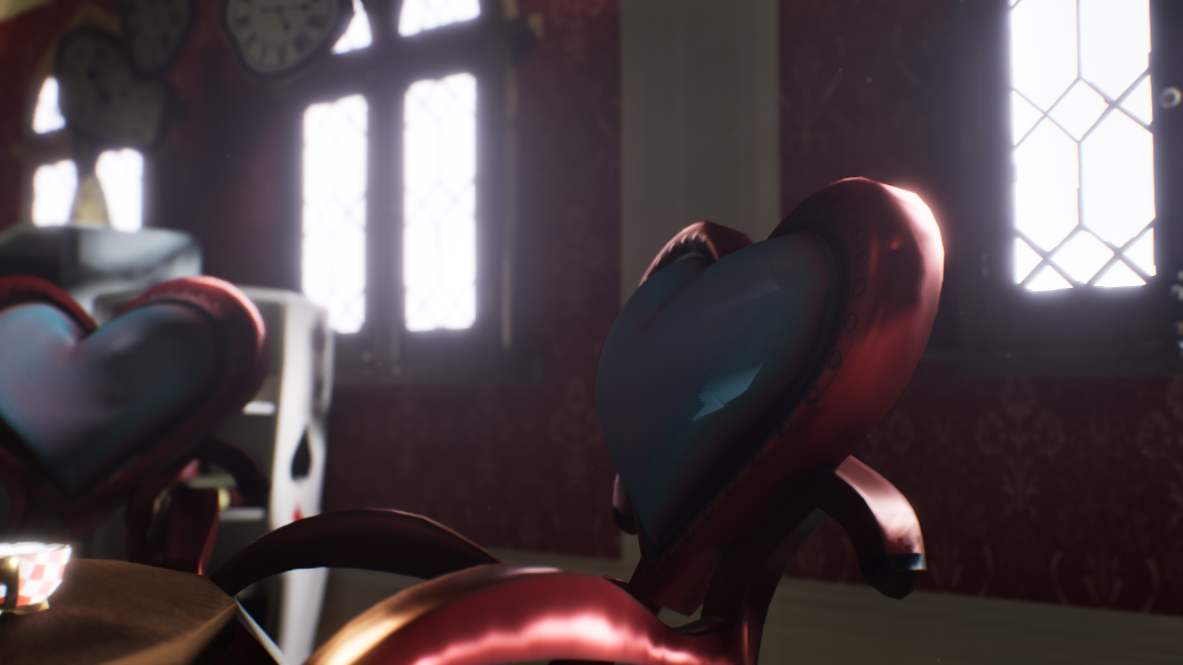

To light the furniture, I started with the idea of using multiple point lights with a small source radius to highlight the glossiness of the surfaces, creating a sense of delicacy.

The god rays (created with volumetric fog) at the end of the hallway are meant to intrigue the viewer and add a sense of mystery.

After

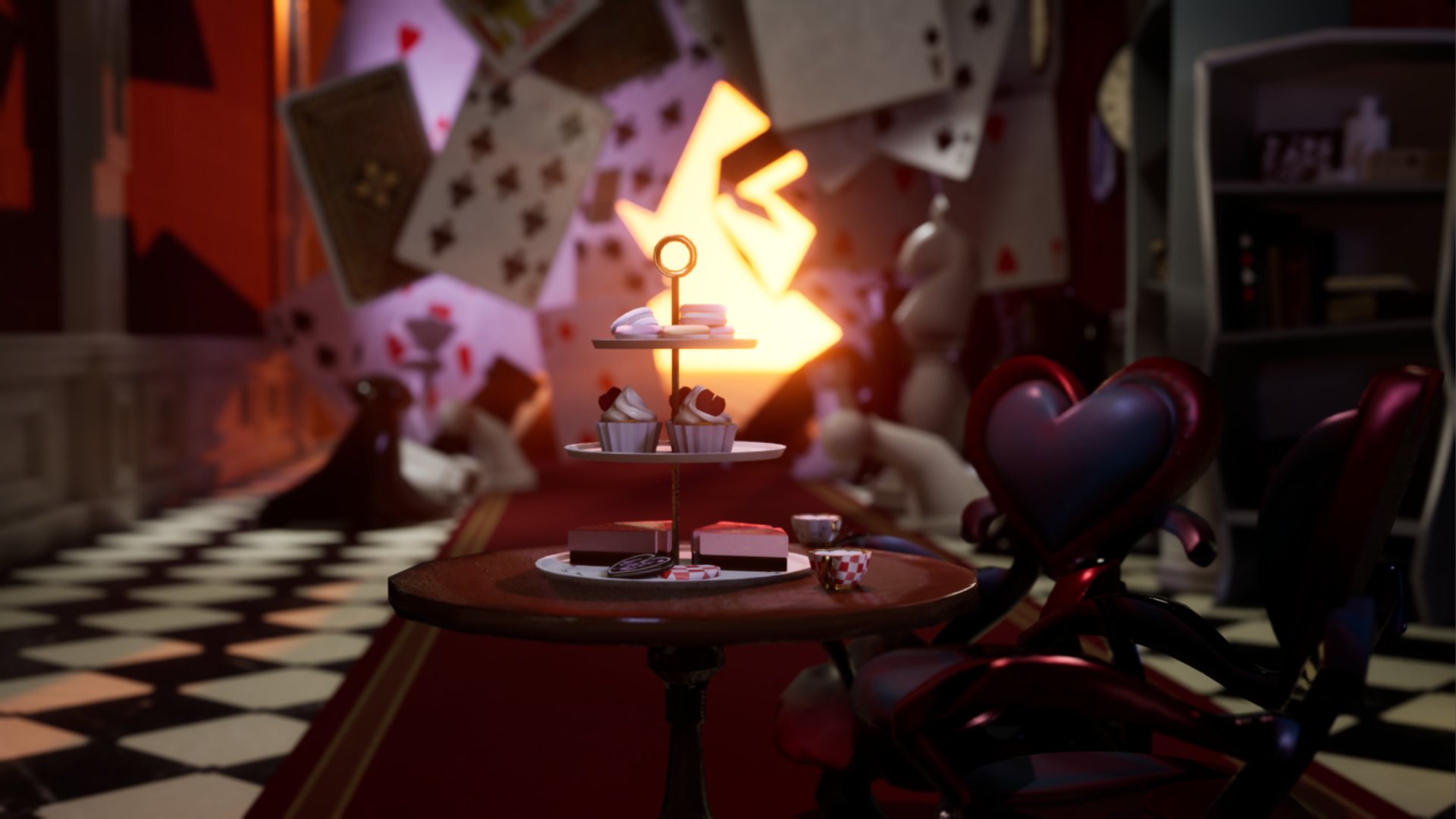

From the ICVFX test shots, I found that, despite the dramatic and colorful lighting contrast, the scene did not feel believable enough because it deviated too much from the color schemes of everyday life.

I therefore changed both the color choices and the light staging to keep the environment lighting more physically plausible.

An HDRI skybox and a sunlight with true-to-life physical parameters add to the believability, while the indoor-outdoor warm-cool temperature contrast still maintains the visual interest.
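The warm-cool contrast can be put into numbers with mireds (one million divided by the color temperature in kelvin), a scale on which equal steps look roughly equally large. The sketch below is a minimal illustration: the 3200 K indoor and 6500 K outdoor values are assumed for the example and are not the scene's actual settings.

```python
def kelvin_to_mired(kelvin: float) -> float:
    """Convert a color temperature in kelvin to mireds (1e6 / K)."""
    if kelvin <= 0:
        raise ValueError("color temperature must be positive")
    return 1_000_000 / kelvin


def mired_shift(from_kelvin: float, to_kelvin: float) -> float:
    """Positive result means the target light is warmer than the source."""
    return kelvin_to_mired(to_kelvin) - kelvin_to_mired(from_kelvin)


# Assumed values: daylight through the windows vs. warm practical lights.
outdoor_k = 6500.0
indoor_k = 3200.0

shift = mired_shift(outdoor_k, indoor_k)
print(f"{shift:.1f} mired warmer indoors")
```

Comparing shifts in mireds rather than raw kelvin keeps the contrast comparable across scenes, because a 500 K change is far more visible at 3000 K than at 6500 K.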

Lighting Optimization

Metahuman Customization and Motion-Capture Pipeline

Coming Soon

Mesh to Metahuman, scan data clean-up

Democratizing Mo-cap Solutions.